Working with versioned buckets

Not that sort of bucket.

But what do we mean by versioned buckets and how did I end up with one anyway?

On an S3 service, most people are aware that the first thing they do is create a bucket to store their objects.

One of the main uses of the vBridge S3 Service today is as a Veeam Scale-Out Backup Repository (SOBR) Capacity Tier. One of the cool features that comes with that is the ability to set Immutability (Object Lock) and force retention of backup data, meaning that neither you, nor the bad guys, nor vBridge for that matter, can remove your important data.

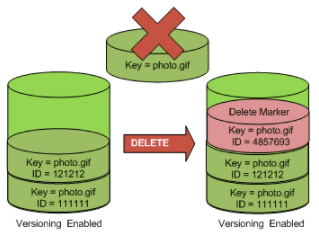

One of the side-effects of turning that feature on is that it turns on a bucket feature called versioning.
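If you are not sure whether a bucket has ended up versioned, the service will tell you. Here is a minimal sketch of that check using `aws s3api get-bucket-versioning`. The function names `ConvertTo-VersioningState` and `Get-BucketVersioningState` are made up for illustration, and the assumption that the CLI prints nothing for a never-versioned bucket comes from how AWS's own S3 behaves, so other S3-compatible services may answer differently.

```powershell
# Interpret the JSON printed by 'aws s3api get-bucket-versioning'.
# Assumption: empty output (or no Status field) means versioning was never enabled.
function ConvertTo-VersioningState {
    param([string]$Json)
    if ([string]::IsNullOrWhiteSpace($Json)) { return "Unversioned" }
    $status = ($Json | ConvertFrom-Json).Status
    if ([string]::IsNullOrWhiteSpace($status)) { return "Unversioned" }
    return $status   # normally "Enabled" or "Suspended"
}

# Ask the S3 endpoint for the versioning state of a bucket.
# The parameter is called AwsProfile to avoid clobbering PowerShell's built-in $PROFILE.
function Get-BucketVersioningState {
    param([string]$Endpoint, [string]$AwsProfile, [string]$Bucket)
    $raw = aws s3api --endpoint-url $Endpoint --profile $AwsProfile get-bucket-versioning --bucket $Bucket | Out-String
    ConvertTo-VersioningState -Json $raw
}

# Example call (placeholder values, substitute your own):
# Get-BucketVersioningState -Endpoint "https://s3-chc.mycloudspace.co.nz" -AwsProfile "MyAWSProfile" -Bucket "mybucket"
```

A bucket that was created with Object Lock should report `Enabled` here.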

In short, this means that every version of an Object is retained. And when you delete an object, it does not actually delete the object. It merely places a delete marker in front of the object and your data is still there.

This is great, because if someone comes in and accidentally deletes any object, then it's still there. You just have to refer to the specific previous version.
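As a rough sketch of what referring to a specific previous version looks like: list the versions for the key, pick the newest real version, and ask for it by version ID. The helper name `Get-NewestVersionId`, the key `path/to/file` and the variable names in the example are hypothetical; `list-object-versions` and `get-object --version-id` are standard `aws s3api` commands.

```powershell
# Pick the VersionId of the most recent real version of a key from a parsed
# list-object-versions response. Delete markers live in a separate
# DeleteMarkers array, so they are never returned here.
function Get-NewestVersionId {
    param($Page, [string]$Key)
    $Page.Versions |
        Where-Object { $_ -and $_.Key -ceq $Key } |   # -ceq: S3 keys are case-sensitive
        Sort-Object -Property { [datetime]$_.LastModified } -Descending |
        Select-Object -First 1 -ExpandProperty VersionId
}

# Example (placeholder values; $awsProfile avoids overwriting PowerShell's built-in $PROFILE):
# $page = aws s3api --endpoint-url $endpoint --profile $awsProfile list-object-versions --bucket $bucket --prefix "path/to/file" | ConvertFrom-Json
# $ver  = Get-NewestVersionId -Page $page -Key "path/to/file"
# aws s3api --endpoint-url $endpoint --profile $awsProfile get-object --bucket $bucket --key "path/to/file" --version-id $ver restored-file
```

Deleting the delete marker itself (by its version ID) would also bring the object back as the current version.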

But if you are using a basic S3 browser, or running aws s3api list-objects-v2, you may be forgiven for thinking that there is nothing there.
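To see what is really in there, you can count the versions and delete markers that `list-object-versions` returns. This is only a sketch: `Get-VersionListingSummary` is a made-up helper name, and it only counts whatever single page of output you hand it, not the whole bucket.

```powershell
# Count the versions and delete markers in the JSON printed by
# 'aws s3api list-object-versions'. Only the supplied page is counted.
function Get-VersionListingSummary {
    param([string]$Json)
    if ([string]::IsNullOrWhiteSpace($Json)) {
        return [pscustomobject]@{ Versions = 0; DeleteMarkers = 0 }
    }
    $page = $Json | ConvertFrom-Json
    [pscustomobject]@{
        # Where-Object drops the $null you get when an array is missing from the response
        Versions      = @($page.Versions      | Where-Object { $_ }).Count
        DeleteMarkers = @($page.DeleteMarkers | Where-Object { $_ }).Count
    }
}

# Example (placeholder values; $awsProfile avoids overwriting PowerShell's built-in $PROFILE):
# $raw = aws s3api --endpoint-url $endpoint --profile $awsProfile list-object-versions --bucket $bucket --max-items 1000 | Out-String
# Get-VersionListingSummary -Json $raw
```

A bucket that looks empty to list-objects-v2 can still show non-zero counts here.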

Again, great. But what happens when I really want to delete that data? Say I have decided that I want to get rid of all the objects in the bucket and just delete everything. You can't delete a bucket while it still has contents, and the online docs show that the basic way to empty one from PowerShell is something like:

Import-Module AWSPowerShell

Get-S3Object -ProfileName MyNewProfile -EndpointUrl 'https://s3-chc.mycloudspace.co.nz' mybucketname | Remove-S3Object -ProfileName MyNewProfile -EndpointUrl 'https://s3-chc.mycloudspace.co.nz' -BucketName mybucketname -Force

Get all the Objects and pipe them through the remove object command. Job done? Not so fast. All you are doing here is adding delete markers to the objects. Your object count, storage space and therefore cost of storage will not drop. And you still can't delete the bucket.

In an ideal world, the software that created the versions and knows about the data would be able to purge the bucket (here's looking at you, Veeam), but if you delete or remove a SOBR from Veeam it just removes the config from the Veeam side and leaves its mess behind in the S3 bucket for someone else to clean up.

There are some good comments online about how you go about deleting data, but some of those are either hard to read, complex, or don't handle large numbers of Objects.

Below is a sample script that uses the aws cli commands to read the versions, and then delete the versions and delete markers.

Note that this is not efficient and will be too slow if you have hundreds of millions of objects. A better way is to bundle responses up and minimise API calls by using e.g. delete-objects instead of delete-object and getting the right number of objects returned per call.

This script is intended for clarity to show the steps needed.

#Delete all versions of all Objects and any delete markers in a version controlled bucket.

#Note that if immutability (Object Lock) is set, then we still need to be past the retention time.

#see: https://stackoverflow.com/questions/29809105/how-do-i-delete-a-versioned-bucket-in-aws-s3-using-the-cli

#We need aws cli setup with profile holding access keys

$nt = "0"

$endloop=0

#Use $max as number of objects per loop

$max=50

$endpoint = "https://s3-chc.mycloudspace.co.nz"

$profile = "MyAWSProfile"

$bucket = "mybucket"

#List-objects returns a max of 1000 items. Loop round while we still have versions to go

while ($endloop -ne 1) {

#Use list-object-versions not list-objects-v2!

$k = aws s3api --endpoint-url $endpoint list-object-versions --profile $profile --bucket $bucket --max-items $max --starting-token $nt | ConvertFrom-Json

$nt= $k.NextToken

#list-object-versions returns a structure containing an array of object-versions, an array of DeleteMarkers and maybe a NextToken.

#If $nt not defined we are at the end of the list. Exit loop

if ([string]::IsNullOrWhiteSpace($nt)) {

$endloop=1

}

##Pass 1: Delete the versions. The number of Versions returned is limited to the max-items set above.

$obj = $k.Versions | ForEach-Object {

$key = $_.Key

$ver = $_.VersionID

write-host "Deleting Version: $key - $ver"

#For clarity in script here, just delete one at a time

#NOTE that you are specifying BOTH the object key and the version ID to permanently delete the item.

$r = aws s3api --endpoint-url $endpoint --profile $profile delete-object --bucket $bucket --key $key --version-id $ver --bypass-governance-retention | ConvertFrom-Json

}

##Pass 2: Delete the Delete Markers. Note there might be a LOT more DeleteMarkers than Versions....

$obj = $k.DeleteMarkers | ForEach-Object {

$key = $_.Key

$ver = $_.VersionID

write-host "Deleting DeleteMarker: $key - $ver"

$r = aws s3api --endpoint-url $endpoint --profile $profile delete-object --bucket $bucket --key $key --version-id $ver --bypass-governance-retention | ConvertFrom-Json

}

}

To make it 'production ready', I'd add in checking of all the return codes to make sure items were actually deleted as expected, plus a lot more sanity checking and logging. And if you run the above on something with 100 million plus Objects, you will be waiting several years for it to complete, so parallelism and batching are a must. But in order to explain the concept, I've got to keep it simple for now.
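For what it's worth, here is one rough way the batching and return-code checking could start to look, using delete-objects with up to 1000 Key/VersionId pairs per call (the S3 API limit). It is a sketch under assumptions, not a tested production tool: the function names `New-DeleteBatchJson`, `Get-DeleteFailures` and `Remove-VersionBatch` are made up, it assumes a quiet-mode response may be empty when everything succeeded, and it does nothing about parallelism.

```powershell
# Build JSON payloads for 'aws s3api delete-objects', with at most
# $BatchSize Key/VersionId pairs per payload (one string per batch).
function New-DeleteBatchJson {
    param([object[]]$Items, [int]$BatchSize = 1000)
    $clean = @($Items | Where-Object { $_ })   # drop nulls left by empty arrays
    for ($i = 0; $i -lt $clean.Count; $i += $BatchSize) {
        $last = [Math]::Min($i + $BatchSize, $clean.Count) - 1
        $objects = @($clean[$i..$last] | ForEach-Object {
            [ordered]@{ Key = $_.Key; VersionId = $_.VersionId }
        })
        # Quiet = true asks the service to report only the failures
        [ordered]@{ Objects = $objects; Quiet = $true } | ConvertTo-Json -Depth 4 -Compress
    }
}

# Pull the per-object failures out of a delete-objects response.
# Assumption: empty output means nothing failed.
function Get-DeleteFailures {
    param([string]$Json)
    if ([string]::IsNullOrWhiteSpace($Json)) { return }
    ($Json | ConvertFrom-Json).Errors | Where-Object { $_ }
}

# Delete one page of Versions and DeleteMarkers in batches and warn about failures.
function Remove-VersionBatch {
    param([string]$Endpoint, [string]$AwsProfile, [string]$Bucket, [object[]]$Items)
    foreach ($json in (New-DeleteBatchJson -Items $Items)) {
        $tmp = [System.IO.Path]::GetTempFileName()
        try {
            # Write UTF-8 without a BOM and pass the payload via file:// to avoid quoting problems
            [System.IO.File]::WriteAllText($tmp, $json)
            $raw = aws s3api --endpoint-url $Endpoint --profile $AwsProfile delete-objects --bucket $Bucket --delete "file://$tmp" --bypass-governance-retention | Out-String
            if ($LASTEXITCODE -ne 0) { Write-Warning "delete-objects exited with code $LASTEXITCODE" }
            foreach ($e in @(Get-DeleteFailures -Json $raw)) {
                Write-Warning "Failed: $($e.Key) $($e.VersionId) - $($e.Code): $($e.Message)"
            }
        } finally {
            Remove-Item -Path $tmp
        }
    }
}

# Example, inside the paging loop of the script above (placeholder values):
# Remove-VersionBatch -Endpoint $endpoint -AwsProfile "MyAWSProfile" -Bucket $bucket -Items (@($k.Versions) + @($k.DeleteMarkers))
```

Writing the payload to a temporary file keeps the JSON away from PowerShell's native-command quoting, which is easy to get wrong when passing it inline.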