How much cloud is 'just right'?

There are many good reasons for businesses to move operations to the cloud. Ease of use, access to complex services, pricing etc. But isn't the cloud just "someone else's computer"?

In March headlines were made due to Microsoft AD global outage.

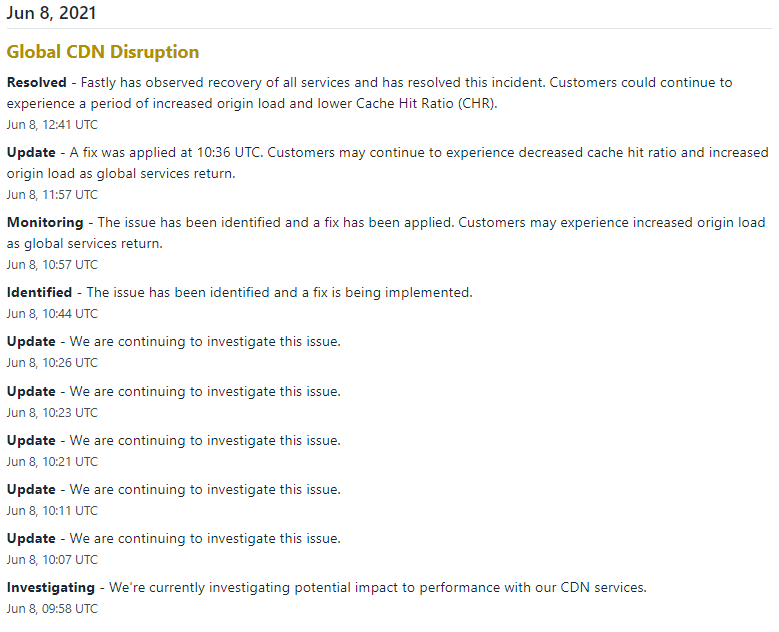

In June Fastly suffered an outage. Reported in the mainstream media as a 'Global Internet Outage'. Well it wasn't really an internet outage at all, it was one massive service on top of it.

The fact that Fastly communicated well on their status page and resolved the issue in about an hour is all credit to them.

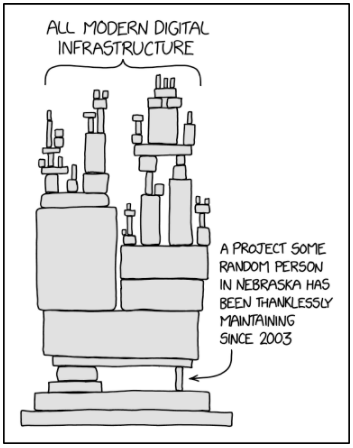

The internet's success is due to it's decentralized nature and a collaborative system of independent networks. Things start to unravel when systems are built on top of single systems or platforms on top of that.

But any business who outsource their services, does not absolve them of the responsibility for the availability of their systems that depend on them.

There is always going to be a trade off. What's just the right amount of complexity?

Certainly you may not want to run everything in house. Yes, that would give you ultimate control and responsibility, but at a scale that may struggle to procure sufficiently resilient hardware, along with the in house skills to operate it. You will be faced with a different set of challenges.

Pushing everything to third party systems means you need to do sufficient due diligence on those platforms. You are likely only one of thousands of customers sharing that service.

Those platforms, by their very nature, are massively scalable. And that scalability comes with a price. Yes there are a bunch of smart people behind them, but there are a bunch of complex systems behind them to keep those running (Automated CI/CD orchestration platforms, modern technologies like Kubernetes). They do tend to work very well, and when they work, they deliver outstanding results. But when they do fail, they can fail in spectacular and unexpected ways. Ways that are also difficult for humans to understand and the level of knowledge required to fix is very high.

There is something to be said for the K.I.S.S principle. Many enterprises do not need to go to the level of complexity that these hyperscalers do, and by using those services you trust that they have their systems in hand. I'm sure Fastly are happy with their SLA, but when something like this hits the media, then you can see this issue with all the eggs in one basket.

No matter how reliable that service is, it becomes a single point of failure.

I like to think that there is a happy medium without going to that level of complexity. Something like the Goldilocks zone. Where everything is just right.

At vBridge we design our core platforms so there is no known single point of failure, Every system has redundant or clustered components with multiple paths. This means we can deliver a very reliable platform without relying on overly complex solutions. We can react and recover as issues occur. We believe we have sufficient scale and expertise to address the issue that on-prem deployments have and we can leverage multiple NZ scale SME solutions to something where we can use world leading solutions.

Does this mean we will never have an outage? Of course not. Technology will find a way to way to fail in a way we have never though of.

Every business needs to find their own Goldilocks zone. In this day and age it is not black and white. There's a sliding scale of Edge to Cloud.