Back Up GitHub to S3 Object Storage Using Workflows

Want a simple way to back up your GitHub repository to object storage? It is easier than ever with GitHub Actions and the vBridge object storage platform, aka Indelible Data.

Things you will need

- A GitHub repository

- An object storage account, bucket and credentials (check out this post to set these up via MyCloudSpace)

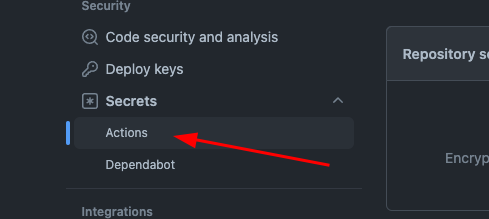

Configure GitHub Secrets

In the repository settings, go to Security > Secrets > Actions.

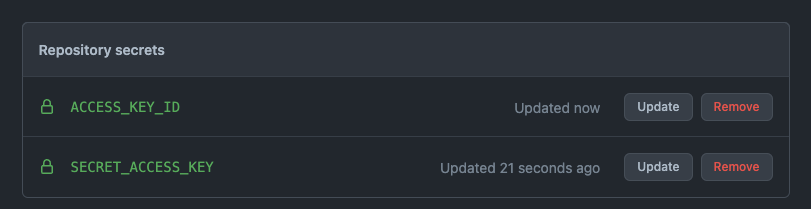

Now add two secrets, ACCESS_KEY_ID and SECRET_ACCESS_KEY, using the values from your object storage tenant in MyCloudSpace. The credentials must have access to the bucket you want to back up into.

It should look like this once you are finished.

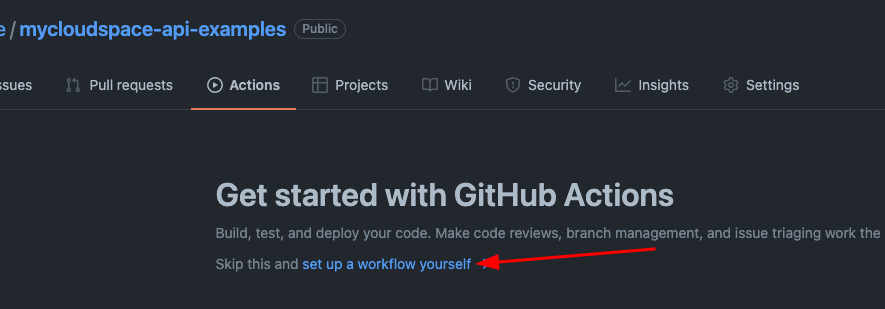

Configure GitHub Workflow

We now need a workflow. Open the Actions tab of the repository, then choose "set up a workflow yourself".

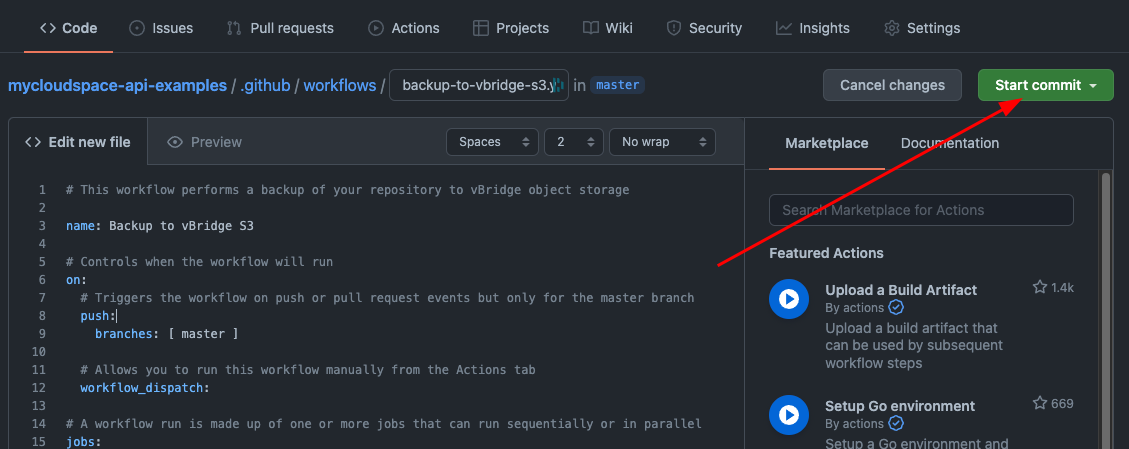

This opens a code editor. Give the file the name backup-to-vbridge-s3.yaml and paste the following code into the editor.

Replace the MIRROR_TARGET value with the name of the bucket you created, followed by the name of the subfolder you want to back up into, such as bucketname/project-folder.

    # This workflow performs a backup of your repository to vBridge object storage
    name: Backup to vBridge S3

    # Controls when the workflow will run
    on:
      # Triggers the workflow on push events, but only for the master branch
      push:
        branches: [ master ]

      # Allows you to run this workflow manually from the Actions tab
      workflow_dispatch:

    # A workflow run is made up of one or more jobs that can run sequentially or in parallel
    jobs:
      # This workflow contains a single job called "build"
      build:
        # The type of runner that the job will run on
        runs-on: ubuntu-latest

        # Steps represent a sequence of tasks that will be executed as part of the job
        steps:
          # Check out the full history (fetch-depth: 0) so the backup includes every commit
          - uses: actions/checkout@v2
            with:
              fetch-depth: 0

          # Mirror the checked-out repository into the bucket
          - name: S3 Backup
            uses: peter-evans/s3-backup@v1.0.2
            env:
              ACCESS_KEY_ID: ${{ secrets.ACCESS_KEY_ID }}
              SECRET_ACCESS_KEY: ${{ secrets.SECRET_ACCESS_KEY }}
              MIRROR_TARGET: your-bucket-name-here/your-folder
              STORAGE_SERVICE_URL: https://s3-akl.mycloudspace.co.nz
            with:
              args: --overwrite --remove

The STORAGE_SERVICE_URL should also be changed to match the region where you provisioned the bucket. In this case it's the Auckland region, but it could be s3-chc.mycloudspace.co.nz for the Christchurch region.
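As a rough sketch, the env block of the S3 Backup step might look like this for a bucket in the Christchurch region. The bucket and folder names are placeholders, and the https:// prefix on the Christchurch hostname is an assumption carried over from the Auckland URL, so check the endpoint against your own MyCloudSpace details.

```yaml
# Fragment of the "S3 Backup" step only; the rest of the workflow stays the same.
env:
  ACCESS_KEY_ID: ${{ secrets.ACCESS_KEY_ID }}
  SECRET_ACCESS_KEY: ${{ secrets.SECRET_ACCESS_KEY }}
  # Placeholder bucket and folder names, replace with your own
  MIRROR_TARGET: bucketname/project-folder
  # Christchurch endpoint (scheme assumed to match the Auckland example)
  STORAGE_SERVICE_URL: https://s3-chc.mycloudspace.co.nz
```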

The secrets you created in the previous step are injected into the workflow and passed to the action as environment variables.

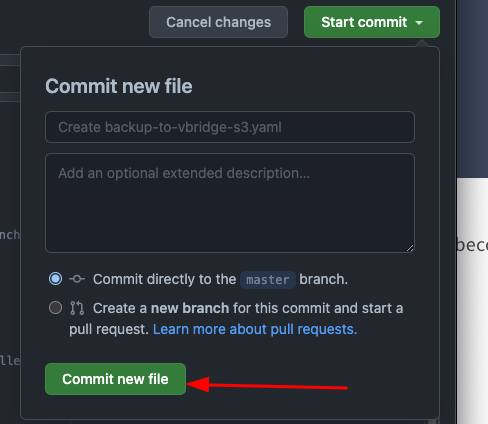

You can now start the commit: hit the big green button, then Commit new file. You can enter a commit message if required and/or change which branch this is committed to (master in my case).

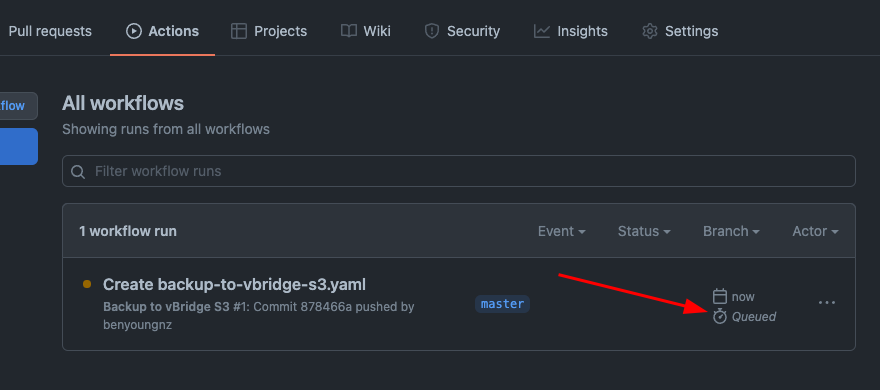

Your workflow will now be queued and will run immediately. Progress can be seen from the Actions tab.

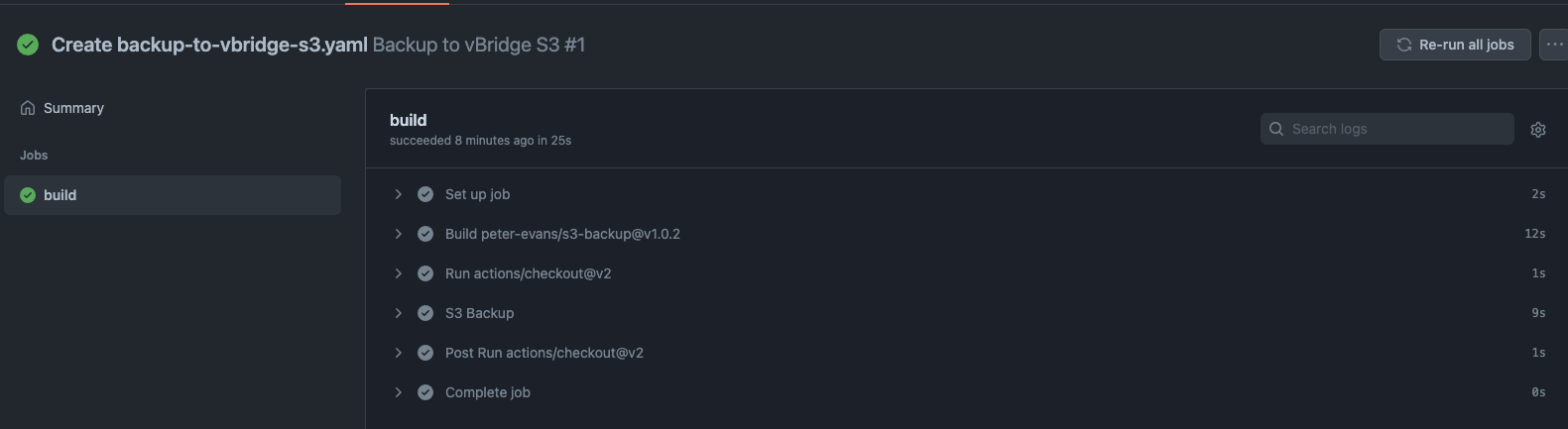

Once it has run, you can click in to view the workflow output. Any failures will show log output for you to diagnose. Mine ran through without issue.

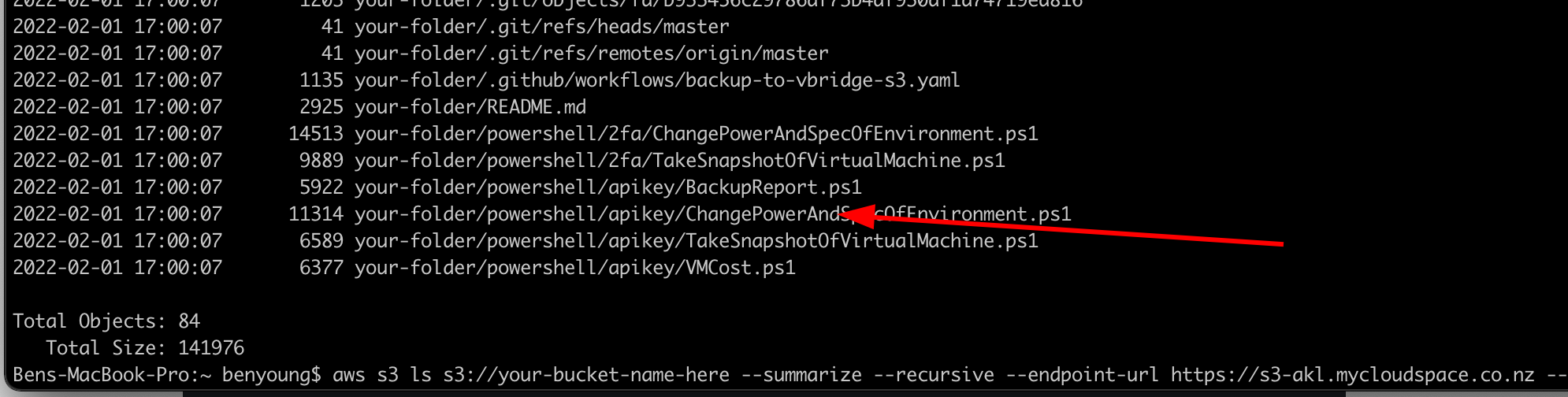

Looking at the bucket now, I can see the code and its history mirrored across into my object bucket.

Now whenever you check code in, this workflow will run and back up to the object storage account. Easy as that, and it is ready if you ever need it, such as during a service outage...
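One thing to watch: the push trigger in the workflow is limited to the master branch, so pushes to other branches will not start a backup. If your default branch has a different name, the trigger would need to match it. A minimal sketch, assuming a default branch called main (an assumption, not part of the setup described here):

```yaml
# Trigger section only; jobs are unchanged from the workflow above.
on:
  push:
    # Assumed branch name; use whatever your default branch is called
    branches: [ main ]

  # Keep the manual trigger so a backup can also be started from the Actions tab
  workflow_dispatch:
```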